AI Reliability Working Group

Last updated January 31, 2025

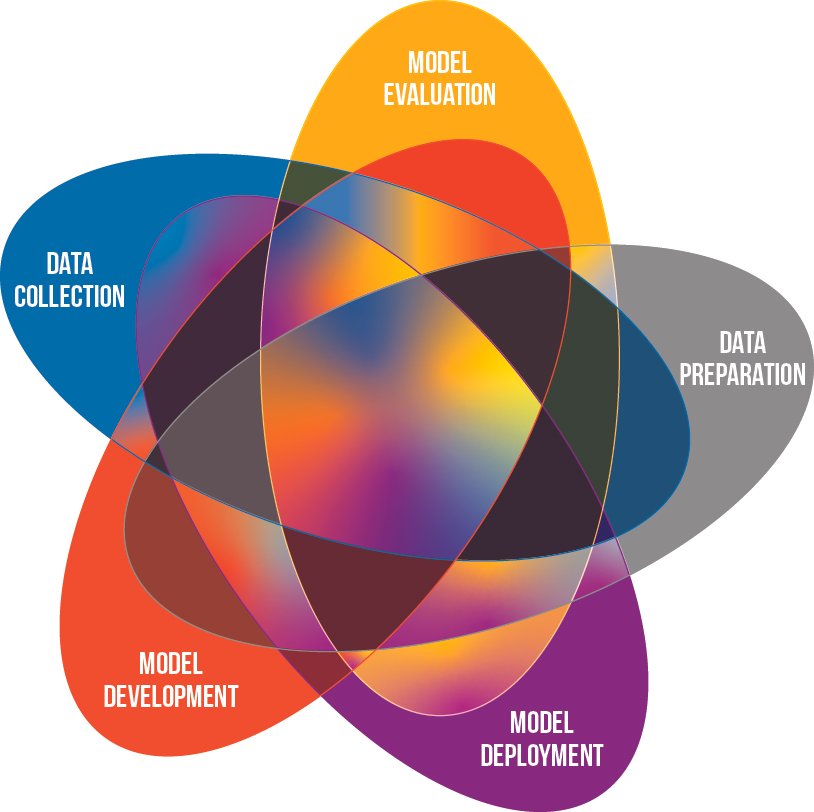

Sound data collection and curation strategies, together with careful data analysis, are critical within the MIDRC commons for developing ethical AI algorithms that produce trustworthy results for all groups. MIDRC strives to address AI reliability issues that may arise from its intended study population or from data collection, curation, and analysis.

An AI reliability tool is available to help researchers identify and mitigate issues that may arise in the AI/ML development pipeline. In addition, an open-source tool for exploring and comparing data representativeness is available on GitHub.

Members:

- Karen Drukker, PhD (lead), University of Chicago
- Weijie Chen, PhD, US Food and Drug Administration
- Judy Gichoya, PhD (lead), Emory University
- Maryellen Giger, PhD, University of Chicago
- Nick Gruszauskas, PhD, University of Chicago
- Jayashree Kalpathy-Cramer, PhD, University of Colorado
- Hui Li, PhD, University of Chicago
- Erin Mueller, University of Chicago
- Rui Carlos Pereira De Sá, PhD, NIH
- Kyle Myers, PhD, Puente Solutions
- Robert Tomek, University of Chicago
- Heather Whitney, PhD, University of Chicago
- Zi Jill Zhang, MD, University of Pennsylvania